
Table of Content Volume 17 Issue 2 - February 2021

A database for facial expressions among Indians

Arunashri1*, Venkateshu K V2, Lalitha C3

1Tutor, 3Professor & HOD, Department of Anatomy, Kempegowda Institute of Medical Sciences, Bengaluru 560070, Karnataka, INDIA. 2Professor, Department of Anatomy, Sri Devaraj URS Medical College, Tamaka, Kolar 563101, Karnataka, INDIA. Email: drarunashri14@gmail.com, venkkodi1991@gmail.com, lalithahal@yahoo.co.in

Abstract Background: The face is the most visible part of the human body in all the interactions of daily life. It helps to identify a person and to express interest both verbally and non-verbally. Although facial expressions are considered universal, there are substantial differences among populations across the globe. Databases of basic facial expressions among Indians are very limited. Material and methods: The study was reviewed and ethically approved by the Institutional Ethical Committee. Participants were selected by stratified random sampling from different zones of India. In all, 112 participants were selected, and informed consent was collected. Participants' expressions were evoked by showing validated emotionally valent videos. The responses were recorded, classified, analyzed and tested statistically. Results: There was a significant difference in the expression of fear, anger, disgust, contempt, sadness and surprise among participants. Happiness was expressed uniformly and was the most welcomed of the basic expressions. Finally, a database of facial expressions among Indians, the Indian Facial Expression Database [IFED], was compiled. Conclusion: The database will be made available and is of great utility to psychiatrists, psychologists and counsellors in case-taking, to plastic surgeons in assessing aesthetic reconstruction surgeries, to researchers in anatomy, dentistry and machine learning, to teachers in the assessment of students, and in criminal interrogation. It helps in recognizing non-verbal communication cues, which makes the management of human interactions more effective. Key words: Face, Facial expression, Database, Indians.

INTRODUCTION The face is the primary focus of an individual during any interaction, whether in health care, business, family or social settings. This is because of the ability of the face to show expressions. Expressions are possible because of the underlying muscles of the face around three apertures: the eyes, nose and mouth. These muscles contract and relax to bring about various expressions and are collectively called the muscles of facial expression [1]. The face, the index of the mind, carries sense organs that communicate with the outer environment. The receptors of all the special senses are on the face, whereas general sensory receptors are found all over the body [1]. In addition to being a part of the gastrointestinal and respiratory tracts, the human face expresses emotions with the help of the facial muscles, which contributes to a person's personality as a whole [2]. Facial expression accounts for non-verbal communication and is crucial for understanding the true intentions of a person. The facial muscles bring about expressions of sadness, surprise, anger, contempt, fear and disgust. Paul Ekman and Friesen worked on facial expressions and coded the actions of the facial muscles into action units, such as tightening the eyelid, relaxing the eyelid, blink, wink, lip corner puller, nose dilator and compressor [9]. After studying humans from various regions around the world, Ekman and Friesen developed the Facial Action Coding System [FACS] in 1976 and 1978 [9]. The basic expressions are anger, contempt, disgust, fear, happiness, surprise and sadness. These seven expressions are considered basic, and combinations of them give rise to many other emotions. Ekman's team has continued to work on facial expression and its analysis over the last five decades [9]. At present, the Paul Ekman company is involved in the detection of lies and micro expressions.

1.1 Previous database: The available databases on facial expressions include the Comprehensive Database for Facial Expression Analysis, consisting of 486 videos, by Cohn-Kanade and co-workers [10]; the MMI Facial Expression Database (2002), containing 2900 videos of 75 subjects [8]; the Japanese Female Facial Expression Database [JAFFE], with 230 static images [8]; the Belfast Naturalistic Database, with 250 images/videos, by Queen's University of Belfast; and the EmotiW (2014) dataset from the Second Emotion Recognition in the Wild Challenge and Workshop [10]. Indian databases of facial expressions are very limited in number. The Indian Movie Face Database [IMFD], developed from Indian movies [10], contains 34512 facial images extracted from 67 male and 33 female actors, with at least 200 images of each actor. Another available database is the Indian Spontaneous Expression Database [ISED] of IIT Kharagpur (2016), which contains images of 50 subjects from different parts of India [13]. There is hardly any database from the Indian population that covers all the basic expressions. The present study focuses on evoked facial expressions among Indians, recorded from 112 subjects for all seven basic expressions, namely anger, contempt, disgust, fear, happiness, sadness and surprise.

1.2 Review of Literature: Facial expression recognition and analysis dates back to the Aristotelian era (4th century BC). A detailed note on the various expressions and the movement of the head muscles was given by John Bulwer in 1649 in his book "Pathomyotomia" [12]. Another interesting work on facial expressions and physiognomy was by Le Brun, the French academician and painter. In 1668, Le Brun gave a lecture at the Royal Academy of Painting, which was later reproduced as a book in 1734 [17]. It is interesting to learn that 18th century actors referred to this book, The Expressions of Passion, in order to achieve perfection in their work [5]. Work on facial expression that relates to present-day automatic facial expression analysis was done by Charles Darwin in his book of 1872. He identified expressions and grouped similar emotions into a single group [5]. In 1862, Duchenne, the French neuroanatomist, published his book "Mécanisme de la physionomie humaine, ou analyse électro-physiologique de l'expression des passions", in which he used electrical current to contract the muscles of facial expression and delineated the groups of muscles that act together to elicit an expression [5]. Darwin, in his book "The Expression of the Emotions in Man and Animals", argued that facial expressions are universal and innate [3]. Several workers in the field, such as Klineberg (1940), La Barre (1947) and Birdwhistell (1963), argued instead that facial expressions are equally influenced by the ethnicity and the cultural and social background of individuals, and that there is no universal innate language of expression [6]. Later in the 20th century, a good amount of work on facial expression recognition and analysis was done by Paul Ekman and Wally Friesen (1976, 1978). They established the Facial Action Coding System [FACS] for recognizing an expression based on the anatomical muscles that bring about the action [4]. FACS was developed to allow researchers to measure the activity of facial muscles from video images of the face. Ekman and Friesen defined 46 distinct action units, each of which corresponds to the activity of a single muscle or a group of muscles eliciting a single facial expression [4]. The basic expressions defined with action units were sadness, happiness, anger, surprise, fear and disgust. Thousands of expressions, with small differences and similarities, can be shown on the face. They are referred to as micro expressions, which last only from milliseconds to a few seconds at most [5]. FACS is a muscle-based approach to creating facial parameters [8]. It consists of action units, including those for head movements and eye positions. Thirty of these relate to the contraction of specific anatomical muscle units that bring about an expression: 12 action units in the upper face and 18 in the lower face. Action units can act singly or in combination. Ann M. Kring's Facial Expression Coding System (FACES) also provides information about the frequency, intensity, valence and duration of facial expressions evoked by video clips [8]. Notarius and Levenson (1979) defined an expression as any change in the face from a neutral display (i.e., no expression) to a non-neutral display. Coders then rate the valence (positive or negative), duration and intensity of each expression detected (Ann M. Kring). Facial expression is produced by the facial muscles, which are inserted into the skin of the face. The emotion expressed results from a combination of facial muscle contractions in and around the eyes, nose and oral cavity. The expressions displayed represent an emotion and can be categorized as mild, moderate or high. The shapes of the eyebrows, eyes, nasal aperture and mouth are transformed in the attempt to express an emotion. By assessing this shape transformation, an expression can be quantified on a scale from neutral to the highest expression [3]. Action units (AUs) can be additive or non-additive. AUs are additive if the appearance of each AU is independent, and non-additive if they modify each other's appearance [5]. Each expression can be a combination of one or more additive or non-additive AUs. For example, fear can be a combination of AUs 1, 2 and 26 [5]. The basic facial expressions listed by Paul Ekman represent emotions such as happiness, fear, anger, sadness, surprise and disgust. Other observational coding systems have also been developed, many of which are variants of FACS. Some are the Facial Action Scoring Technique (FAST) [5], EMFACS (Emotional Facial Action Coding System), Facial Electromyography (EMG), Affect Expressions by Holistic Judgement (AFFEX), FACSAID (FACS Affect Interpretation Database) and Infant/Baby FACS.
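As a rough illustration of how an AU-coded clip might be represented and matched against a prototype, the following minimal Python sketch may help. It is not the coding procedure used for the database. The class name, the field names and the 1 to 3 intensity scale are hypothetical, and the only prototype filled in is the fear combination (AUs 1, 2 and 26) quoted in the text.

```python
from dataclasses import dataclass

# Standard FACS names for the three action units in the fear example.
AU_NAMES = {1: "inner brow raiser", 2: "outer brow raiser", 26: "jaw drop"}

# Prototype table: only the combination quoted in the text is filled in.
PROTOTYPES = {"fear": frozenset({1, 2, 26})}


@dataclass(frozen=True)
class CodedExpression:
    """One annotated clip (hypothetical record layout)."""
    action_units: frozenset  # AU numbers observed by the coder
    valence: str             # "positive" or "negative"
    intensity: int           # assumed scale: 1 mild, 2 moderate, 3 high
    duration_s: float        # duration of the expression in seconds


def matching_labels(coded, prototypes=PROTOTYPES):
    """Return labels whose prototype AUs are all present in the coded clip."""
    return sorted(
        label for label, aus in prototypes.items() if aus <= coded.action_units
    )


# Hypothetical clip: AUs 1, 2 and 26 plus one extra unit.
clip = CodedExpression(frozenset({1, 2, 5, 26}), "negative", 2, 1.4)
print(matching_labels(clip))  # ['fear']
```

A subset test is used so that extra action units in a clip do not prevent a match; whether that is appropriate for non-additive AUs would need to be decided by a trained coder.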

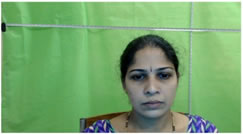

MATERIALS AND METHODS Type of the study: Observational, qualitative cross-sectional study with a sample size of 112, obtained by statistical calculation from previous studies. Participants were Indian subjects aged 18-40 years who were apparently in good health and capable of expressing facial expressions. Participants were selected by the stratified random sampling method and were invited through announcements about the project in various classrooms of the university and college campuses of the medical schools. Of the participants, 60% belonged to the south zone of India and the remaining 40% to the east, west and north zones.

Method: The intended database was of the evoked type. Participants were shown pre-selected, validated, emotionally valent videos one after another, one for each emotion: anger, contempt, disgust, happiness, surprise, fear and sadness. Each video lasted between 17 seconds and 7 minutes, depending on the expression to be elicited. All participants were given an information sheet and asked to read through it, and any queries related to the project were answered in the language they were comfortable with. Informed consent was then collected. Preliminary data were collected, and instructions for the experiment were given to each participant separately. Participants were instructed to watch the videos one after another and to express the emotion they felt as they watched. As they watched, their faces were recorded with a Logitech C920 HD webcam at 1080p resolution. The participant was seated in front of the computer that contained the validated experimental videos. The experimental set up was well lit with photo studio lights, and the background was kept darker to decrease the reflection of light and capture the expressions better. Only the participant and the investigator were in the room during the experiment. The investigator sat in the shadow with another computer, recording the expressions. The participant was kept at ease and in comfort. The investigator did not interrupt the participant in any manner once the recording began. After each video, the participant wrote a self-report, in a set format, on the emotion they felt and its valence. The experiment took nearly 30 minutes per participant, including watching the videos and completing the self-reports.

The Experimental Set up
Figure 1: Experimental set up [representational; figures reproduced with consent]
Figure 2: Lateral view, representational, showing the experimental set up [figures reproduced with consent]

Obstacles such as spectacles, beard, moustache and hair were not restricted, because restrictions would have made the participants more conscious of being recorded. The participants were watching these videos for the first time and were not aware of their contents.
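The stratified random sampling mentioned in the methods can be sketched as follows. This is only an illustration under stated assumptions: the volunteer pool, the participant identifiers and the per-zone quotas (a 6/2/1/1 split that mirrors the reported 60% south share on a toy scale) are hypothetical and are not taken from the study.

```python
import random


def stratified_sample(pool, quotas, seed=0):
    """Draw a fixed number of participants at random from each zone.

    pool   -- list of (participant_id, zone) tuples (hypothetical volunteers)
    quotas -- dict mapping zone name to the number of participants to draw
    """
    rng = random.Random(seed)  # seeded so the draw is reproducible
    chosen = []
    for zone, k in quotas.items():
        stratum = [entry for entry in pool if entry[1] == zone]
        chosen.extend(rng.sample(stratum, k))  # sampling without replacement
    return chosen


# Hypothetical pool: 20 volunteers per zone, IDs such as "S00" or "N07".
zones = ["south", "north", "east", "west"]
pool = [(f"{zone[0].upper()}{i:02d}", zone) for zone in zones for i in range(20)]

# Hypothetical quotas: 60% from the south zone, 40% spread over the others.
quotas = {"south": 6, "north": 2, "east": 1, "west": 1}

selected = stratified_sample(pool, quotas, seed=42)
print(len(selected))  # 10
```

Sampling within each zone separately keeps the zone proportions fixed in advance, which a simple random draw from the whole pool would not guarantee.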

RESULTS AND ANALYSIS The results were the videos obtained from 112 participants for the seven basic expressions. These videos were classified under each expression, namely anger, contempt, disgust, fear, happiness, sadness and surprise. The videos were then annotated, clipped and compiled into a database of facial expressions by a certified facial expression coder trained by the Paul Ekman company, a premier institute for training in facial expressions and micro expressions. The videos were scored with the action units [AU] involved, and finally the database was created and uploaded as the Indian Facial Expression Database [IFED]. The IFED contains Anger [343 MB], Contempt, Disgust [658 MB], Fear [164 MB], Happiness [873 MB], Sadness [979 MB], Surprise [262 MB] and neutral face [24.5 MB]. Gender-wise: male [29 participants]; female [83 participants]. Table 1: Details of the videos in the database

Participant response Analysis The responses of the participants were cross-checked against their self-reports, the coder's report and the iMotions software analysis, and were compared statistically. Statistical Analysis Results were analysed with www.SciSat.com and tested with the McNemar test for proportions at the 95% confidence level. For happiness, the p value was 0.68 (> 0.05), which indicates no significant difference between the expression felt and the expression analysed in the population. From this, we conclude that happiness is a very welcome expression among Indians and is uniformly and easily expressed. For contempt, disgust, fear, anger, surprise and sadness, the p value was less than 0.05, and the difference was therefore significant. We infer from this test that these emotions are not easily expressed among the population. Therefore, observation of the patient's face, expressions and cues by the clinician during case-taking will be of great utility in improving patient care.
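For readers unfamiliar with the McNemar test, the following minimal Python sketch shows how it compares two paired yes/no ratings, such as whether an emotion was self-reported and whether the coder detected it. The pairing and the counts are hypothetical and are not the study's data. Only the discordant pairs enter the test: `b` counts cases rated yes by the first source and no by the second, and `c` counts the reverse.

```python
import math


def mcnemar_chi2(b, c):
    """Uncorrected McNemar chi-square statistic and p value (1 degree of freedom)."""
    n = b + c
    if n == 0:
        return 0.0, 1.0  # no discordant pairs, so no evidence of a difference
    stat = (b - c) ** 2 / n
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


def mcnemar_exact(b, c):
    """Exact two-sided McNemar p value from the binomial distribution."""
    n = b + c
    k = min(b, c)
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical discordant counts for two emotions.
print(mcnemar_exact(5, 5))       # balanced disagreement -> 1.0
stat, p = mcnemar_chi2(2, 12)    # lopsided disagreement
print(round(stat, 2), p < 0.05)  # 7.14 True
```

The exact version is usually preferred when the number of discordant pairs is small, since the chi-square approximation becomes unreliable there.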

DISCUSSION The Indian Movie Face Database [IMFD] is a collection of movie actors' poses and images for all expressions [12]. The IFED is a step forward in research on facial expression, as it contains videos for all seven basic expressions, in contrast to the ISED [14], which is restricted to only four of the basic expressions and a sample size of 50. The present database contains videos of 112 participants from India, all classified under the seven basic expressions. The database is available online at http://indianfacialexpressiondatabase.com, and access can be obtained with a permission code from the author. This database is unique in presenting all seven basic expressions.

Table 2: Facial Expression- Action units and Muscles involved in basic expressions [1]

CONCLUSION AND SCOPE The database will be made available and is of great utility to psychiatrists, psychologists and counsellors in case-taking, to researchers in anatomy, dentistry and machine learning, to teachers in the assessment of students, and in criminal interrogation. It helps in recognizing non-verbal communication cues, which makes the management of human interactions more effective. Future work can focus on annotating the action units, on replicating the work with a larger number of male participants and a wider age group, and on capturing live spontaneous human interactions.

ACKNOWLEDGEMENT The authors would like to thank all the participants for their participation in the project, without whom the database would not have been possible. The authors would also like to thank Mr. Pradyumna S. Atre profoundly for the technical compilation of the database.

REFERENCES

Policy for Articles with Open Access: Authors who publish with MedPulse International Journal of Community Medicine (Print ISSN: 2579-0862) (Online ISSN: 2636-4743) agree to the following terms: Authors retain copyright and grant the journal right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgement of the work's authorship and initial publication in this journal. Authors are permitted and encouraged to post links to their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work.
